Haripriya Harikumar

Research Fellow. Centre for AI Fundamentals, Department of Computer Science. University of Manchester. UK.

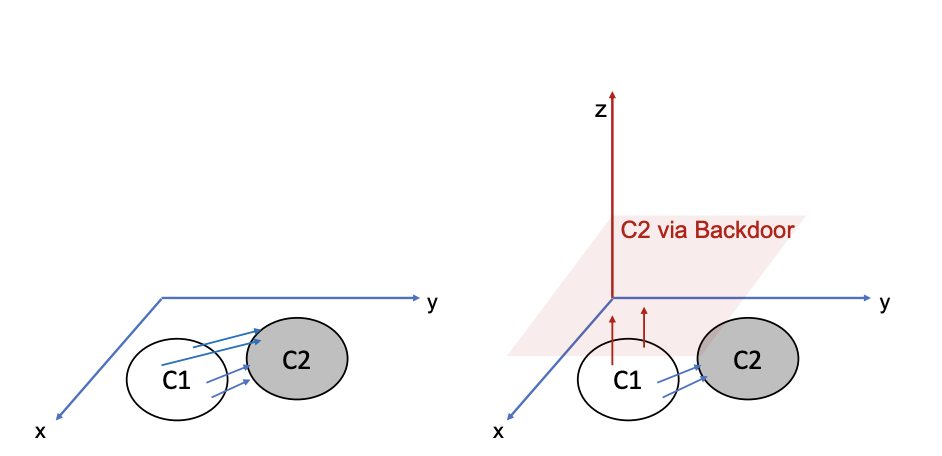

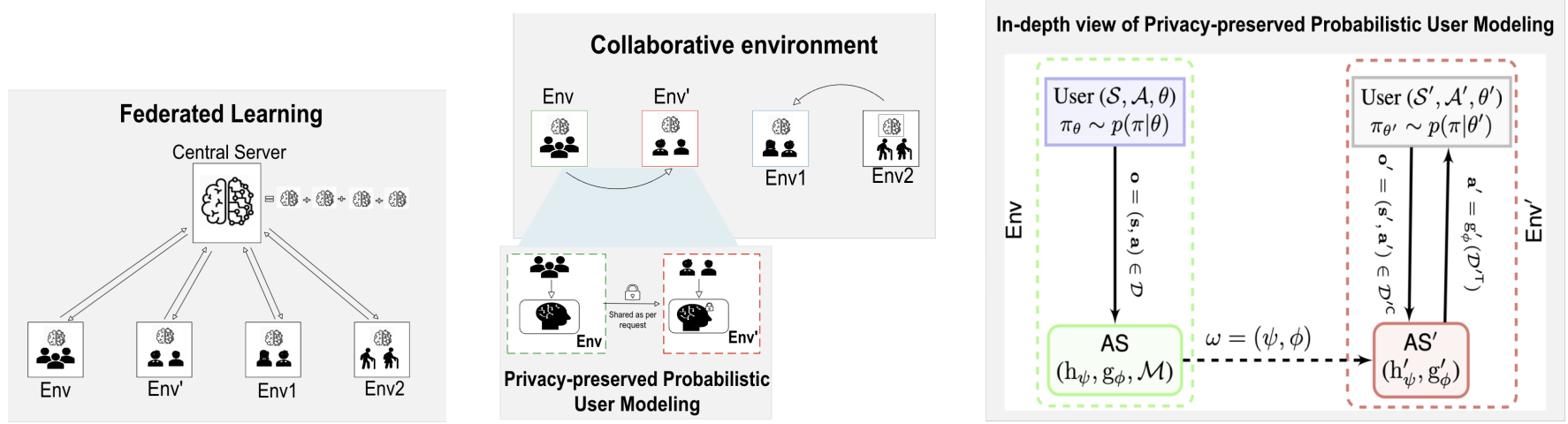

Artificial Intelligence systems are inherently vulnerable to a range of threats, including adversarial and backdoor attacks. My research centers on developing mechanisms for AI Safety and Security, spanning both fundamental theory and practical deployment. I focus on ensuring that AI systems remain secure, trustworthy, and robust, particularly in adversarial or malicious settings. This includes designing methods that preserve the privacy of sensitive data and enable reliable predictions and safe decision-making under adversarial threats and distribution shift. I am also deeply interested in the underlying principles of out-of-distribution detection, data manifold learning, machine unlearning, and the robustness of Vision-Language Models and Large Language Models. I aim to make AI-driven technologies, such as healthcare tools, online platforms, and autonomous systems, more reliable and safer for everyone to use.

news

| Date | News |
|---|---|
| Nov 26, 2025 | Attended the Turing AI Fellowship Annual Event 2025 at The Royal Society, London! |
| Aug 04, 2025 | Awarded a travel grant from the European Lighthouse on Secure and Safe AI (ELSA) to attend the CISPA-ELLIS Summer School 2025 on Trustworthy AI (August 4-8, 2025) |
| Jul 20, 2025 | Attended and presented a poster at the Uncertainty in Artificial Intelligence (UAI) Conference (Rank A*) in Rio, Brazil! |
| Oct 04, 2024 | Awarded a Global Talent Visa to join the University of Manchester! |
| Dec 05, 2023 | Co-organized NeurIPS Workshop |

selected publications

- Defense Against Multi-target Multi-trigger Backdoor Attacks (2025)